The Cerio Accelerated Computing Platform provides the foundation for agile systems designed for compute-intensive applications, from visualization and simulation to analytics and AI.

Bring the power of accelerated computing to your data center. Continually evolve, immediately optimize and always maximize the availability of every resource.

Cerio is the only platform that delivers the promise of composability for full access to compute resources across the entire data center. Not a single server, not a single rack – the entire data center.

The Cerio service management layer uses a declarative programming model to enable rapid creation of Customer-Facing Services (CFS) and provides the front-end interface for orchestration. Services include:

The Cerio overlay services layer implements the policy and configuration rules defined by the service management layer to optimize the transport of different types of traffic and to ensure full interoperability with existing system services. Administrators can define and manage traffic flows in software without being constrained by the underlying physical infrastructure.

Fabric Management: Overlay services and device chassis are managed in software using a standards-based composability data model.

Transport Adaptation: Native PCIe transport is decoupled from the underlay fabric to deliver robust systems at scale.

Hardened in high-performance computing, the Cerio underlay optimizes traffic flow across a high radix of optimal paths that share no common links, minimizing contention and congestion.
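The property described here, a set of paths that share no common links, can be checked mechanically. The following is a minimal Python sketch, assuming each path is given as a list of node names and that links are undirected. The function names are hypothetical and do not represent Cerio's routing software.

```python
from itertools import combinations

def links(path: list[str]) -> set[frozenset[str]]:
    """Return the undirected links traversed by a path such as ["A", "B", "D"]."""
    return {frozenset(hop) for hop in zip(path, path[1:])}

def are_link_disjoint(paths: list[list[str]]) -> bool:
    """True if no two paths share a link; shared endpoints are allowed."""
    return all(not (links(p) & links(q)) for p, q in combinations(paths, 2))

# Two routes from A to D: disjoint via B and C, but not if both use link A-B.
print(are_link_disjoint([["A", "B", "D"], ["A", "C", "D"]]))       # True
print(are_link_disjoint([["A", "B", "D"], ["A", "B", "C", "D"]]))  # False
```

Because a single link failure can affect at most one path in a link-disjoint set, any set of two or more such paths always leaves at least one route intact.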

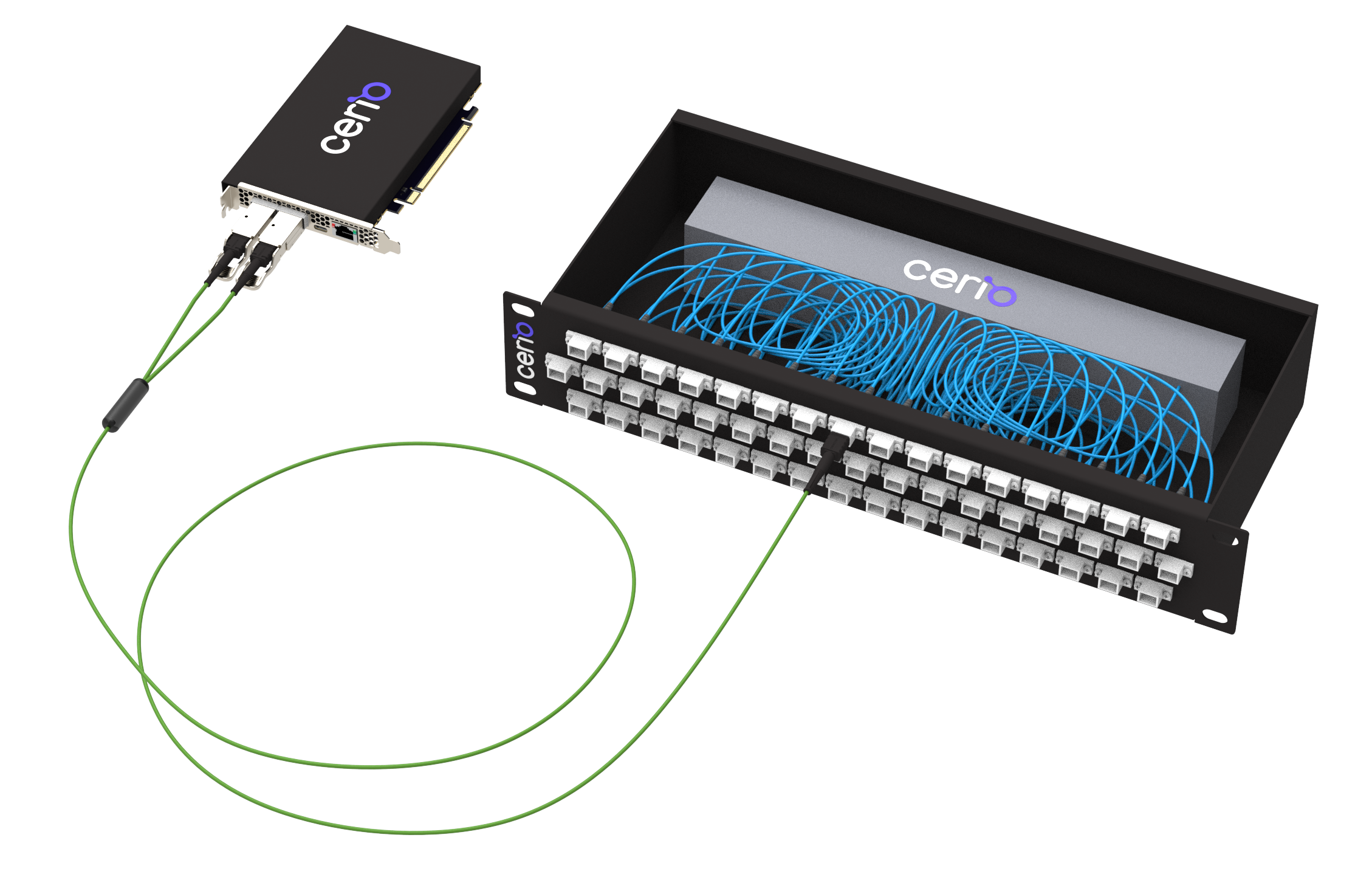

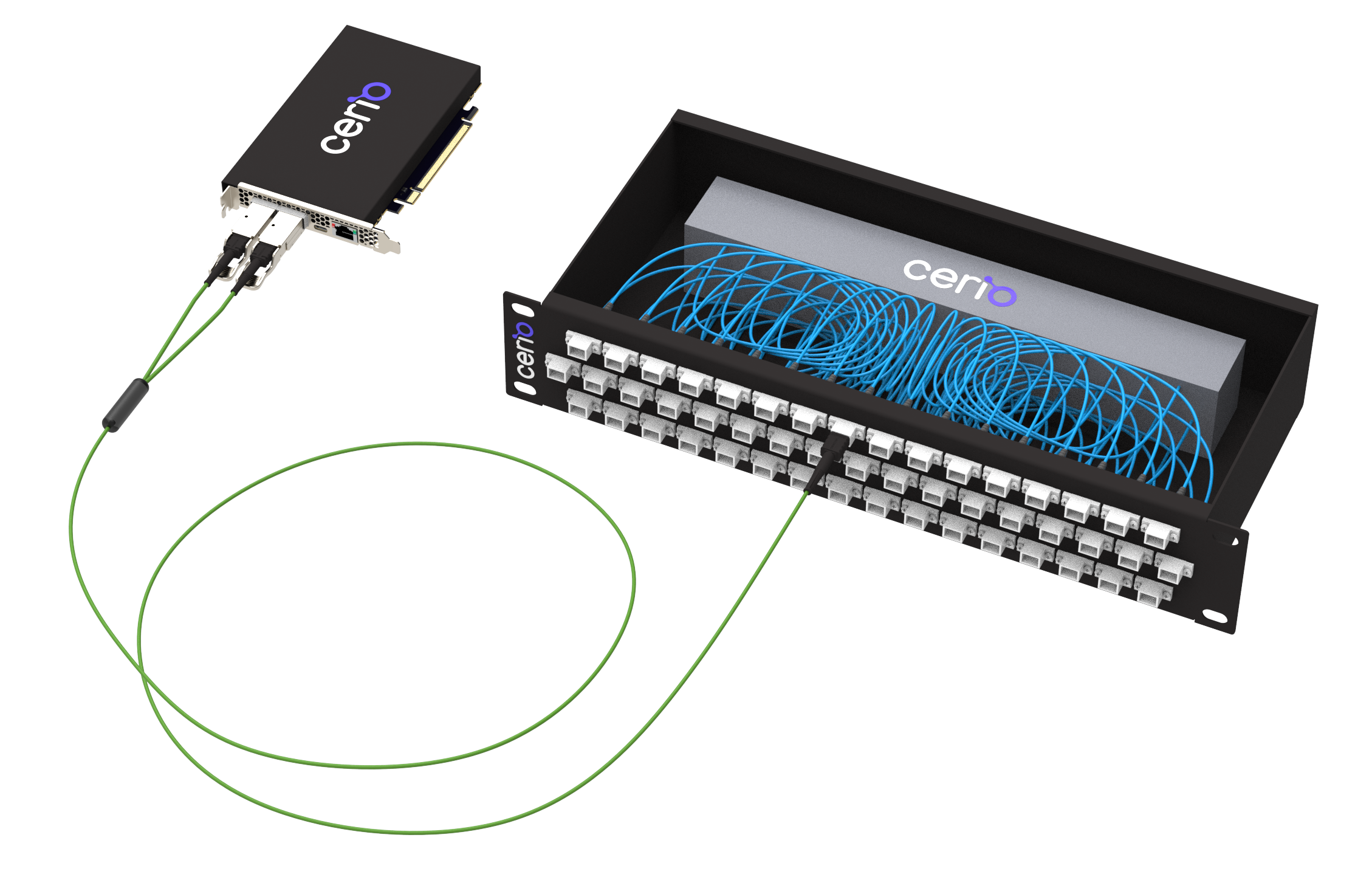

Fabric Node: Self-discovering, self-configuring and self-healing, a Fabric Node is installed in each server and chassis, enabling fully distributed operation and direct connections for highly efficient data transport.

Fabric SHFL: The Cerio interconnect, called a SHFL, connects each Fabric Node to an optical port over a single optical cable and implements pre-wired topologies that require no configuration and consume no power or cooling.

Adaptive Multipath Routing: Traffic is distributed across physically independent paths. When congestion or failures are detected, traffic is automatically rerouted to other paths. Configurable QoS ensures that high-priority traffic is always serviced first.
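The combination of failure-aware path selection and priority servicing can be sketched in a few lines. This is a minimal illustration, assuming priorities are small integers where lower means more urgent, and using "packets assigned so far" as a stand-in for congestion. The class and method names are hypothetical; Cerio's actual routing and QoS logic is not described here.

```python
import heapq
from dataclasses import dataclass
from itertools import count

@dataclass
class PathState:
    name: str
    up: bool = True
    assigned: int = 0  # packets sent on this path so far (a simple congestion proxy)

class MultipathScheduler:
    """Hypothetical sketch: priority-ordered dispatch over independent paths."""

    def __init__(self, path_names: list[str]) -> None:
        self.paths = {name: PathState(name) for name in path_names}
        self._queue: list[tuple[int, int, str]] = []
        self._seq = count()  # tie-breaker keeps FIFO order within one priority

    def enqueue(self, packet: str, priority: int) -> None:
        # Lower number = higher priority, so priority 0 is always serviced first.
        heapq.heappush(self._queue, (priority, next(self._seq), packet))

    def mark_down(self, name: str) -> None:
        # A detected failure removes the path from consideration.
        self.paths[name].up = False

    def _pick_path(self) -> PathState:
        healthy = [p for p in self.paths.values() if p.up]
        if not healthy:
            raise RuntimeError("no healthy path available")
        # Prefer the least-used healthy path to steer traffic away from congestion.
        return min(healthy, key=lambda p: p.assigned)

    def dispatch(self) -> list[tuple[str, str]]:
        """Drain the queue and return (packet, path) pairs in service order."""
        result = []
        while self._queue:
            _, _, packet = heapq.heappop(self._queue)
            path = self._pick_path()
            path.assigned += 1
            result.append((packet, path.name))
        return result

sched = MultipathScheduler(["p0", "p1", "p2"])
sched.mark_down("p1")
sched.enqueue("bulk-1", priority=2)
sched.enqueue("ctrl-1", priority=0)
sched.enqueue("bulk-2", priority=2)
print(sched.dispatch())
# [('ctrl-1', 'p0'), ('bulk-1', 'p2'), ('bulk-2', 'p0')]
```

The control packet jumps ahead of the bulk traffic, and the failed path `p1` is never used; the remaining load alternates between the two healthy paths.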

Distributed FLIT Switching: PCIe transaction layer packets (TLPs) are segmented into variable-sized packages, called FLITs, at each source and forwarded to the destination along optimal virtual channels embedded in each Fabric Node.
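Segmentation at the source and reassembly at the destination can be illustrated as follows. This sketch assumes a fixed maximum FLIT payload, with only the final FLIT of each TLP being shorter, which is one simple way to produce variable-sized FLITs. The 64-byte cap, the `Flit` fields, and the function names are hypothetical and are not Cerio's FLIT format.

```python
from dataclasses import dataclass

MAX_FLIT_PAYLOAD = 64  # hypothetical size cap in bytes, chosen for illustration only

@dataclass(frozen=True)
class Flit:
    tlp_id: int     # which TLP this FLIT belongs to
    seq: int        # position of the FLIT within the TLP
    last: bool      # marks the final FLIT of the TLP
    payload: bytes

def segment(tlp_id: int, tlp: bytes, max_payload: int = MAX_FLIT_PAYLOAD) -> list[Flit]:
    """Split one TLP into FLITs; the final FLIT may be shorter than the rest."""
    if not tlp:
        raise ValueError("empty TLP")
    chunks = [tlp[i:i + max_payload] for i in range(0, len(tlp), max_payload)]
    return [Flit(tlp_id, seq, seq == len(chunks) - 1, chunk)
            for seq, chunk in enumerate(chunks)]

def reassemble(flits: list[Flit]) -> bytes:
    """Rebuild the TLP at the destination, even if FLITs arrived out of order."""
    ordered = sorted(flits, key=lambda f: f.seq)
    complete = (bool(ordered)
                and [f.seq for f in ordered] == list(range(len(ordered)))
                and ordered[-1].last)
    if not complete:
        raise ValueError("incomplete FLIT sequence")
    return b"".join(f.payload for f in ordered)

tlp = bytes(range(150))
flits = segment(tlp_id=7, tlp=tlp)
print([len(f.payload) for f in flits])           # [64, 64, 22]
print(reassemble(list(reversed(flits))) == tlp)  # True
```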

Link and Path Reliability: Lossless delivery guarantees for PCIe links are provided using inter-node buffer credits, underlay link-level retransmissions, and end-to-end monitoring and retransmission services.
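The two link-level mechanisms named here, buffer credits and retransmission, can be modeled for a single hop. In this minimal sketch the sender may transmit only while it holds a credit (one per free receive buffer), and it keeps a copy of each transfer until it is acknowledged so that a corrupted arrival can be replayed. The class, its methods, and the assumption that a replay always arrives intact are hypothetical simplifications, not Cerio's protocol.

```python
from collections import deque
from typing import Optional

class CreditLink:
    """Hypothetical sketch of one lossless hop using buffer credits and link-level replay."""

    def __init__(self, rx_buffers: int) -> None:
        self.credits = rx_buffers          # one credit per free receive buffer
        self.rx: deque[str] = deque()      # receiver-side buffers
        self.unacked: dict[int, str] = {}  # sender keeps a copy until ACKed
        self.next_seq = 0
        self.retransmissions = 0

    def send(self, item: str) -> Optional[int]:
        """Consume a credit and transmit; return None (stall) when no credit is left."""
        if self.credits == 0:
            return None  # back-pressure: wait instead of dropping, so nothing is lost
        self.credits -= 1
        seq, self.next_seq = self.next_seq, self.next_seq + 1
        self.unacked[seq] = item
        return seq

    def receive(self, seq: int, corrupted: bool = False) -> None:
        """Receiver side of the link for the transfer numbered seq."""
        if corrupted:
            # NAK: the sender replays its retained copy (assumed to arrive intact here).
            self.retransmissions += 1
        self.rx.append(self.unacked.pop(seq))  # ACK releases the sender's copy

    def drain(self) -> str:
        """Consumer frees a receive buffer, which returns one credit to the sender."""
        item = self.rx.popleft()
        self.credits += 1
        return item

link = CreditLink(rx_buffers=2)
a, b = link.send("tlp-a"), link.send("tlp-b")
print(link.send("tlp-c"))        # None: no credits left, so the sender stalls
link.receive(a)
link.receive(b, corrupted=True)  # replayed once at link level
print(link.retransmissions)      # 1
print(link.drain())              # tlp-a
print(link.send("tlp-c"))        # 2: the returned credit unblocks the sender
```

The end-to-end monitoring and retransmission services mentioned above would sit on top of this per-hop model and are not shown.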

Create high-density GPU clusters by connecting up to 64 GPUs to a single host, and scale up, down or out to fit your GPU infrastructure.

Build systems on the fly and compose in seconds, while reducing power, cooling, rack space and cabling.

Hot-swap failed hardware in real time through software, without time-consuming manual upgrades or any impact on service or production availability.

Maximize per-application data flows to optimize the performance of your infrastructure for AI, machine learning and deep learning.

Use any hardware device from any vendor or generation with full hardware heterogeneity.
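Composing systems in software with a declarative model, within the 64-GPUs-per-host limit stated above, can be pictured as a small planner: the administrator declares which GPUs each host should have, and the software derives the attach and detach steps. This is a minimal sketch under that assumption; the data layout, the `plan` function, and the action tuples are hypothetical and do not represent Cerio's API or data model.

```python
MAX_GPUS_PER_HOST = 64  # per-host limit stated above; all names below are hypothetical

Assignment = dict[str, set[str]]  # host name -> set of GPU IDs

def plan(desired: Assignment, current: Assignment) -> list[tuple[str, str, str]]:
    """Turn a declared host->GPU layout into ordered attach/detach actions."""
    owner: dict[str, str] = {}
    for host, gpus in desired.items():
        if len(gpus) > MAX_GPUS_PER_HOST:
            raise ValueError(f"{host}: {len(gpus)} GPUs exceeds {MAX_GPUS_PER_HOST}")
        for gpu in gpus:
            if gpu in owner:
                raise ValueError(f"{gpu} requested by both {owner[gpu]} and {host}")
            owner[gpu] = host

    hosts = sorted(current.keys() | desired.keys())
    # Detach first so a GPU moving between hosts is released before it is re-attached.
    detach = [("detach", h, g) for h in hosts
              for g in sorted(current.get(h, set()) - desired.get(h, set()))]
    attach = [("attach", h, g) for h in hosts
              for g in sorted(desired.get(h, set()) - current.get(h, set()))]
    return detach + attach

current = {"h1": {"g0", "g1"}}
desired = {"h1": {"g0"}, "h2": {"g1", "g2"}}
for action in plan(desired, current):
    print(action)
# ('detach', 'h1', 'g1')
# ('attach', 'h2', 'g1')
# ('attach', 'h2', 'g2')
```

Declaring the end state rather than scripting each step means the same `plan` call works for scaling up, scaling down, or moving a GPU between hosts.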

AI is driving demand for GPUs and specialized accelerators to meet the complex processing requirements of new applications and services. Modeling the available GPU capacity in your data center – and what you can actually access – is critical for planning the cost-effective scale of your AI infrastructure.

Distributed by Design. Calibrated for Composable Infrastructure.

The PCIe scale barrier is placing limits on traditional and composable infrastructure, adding cost and complexity. PCIe decoupling technology in the Cerio open systems platform removes the single PCIe domain limit to enable elastic scale and agility for highly optimized and cost-effective AI infrastructure.